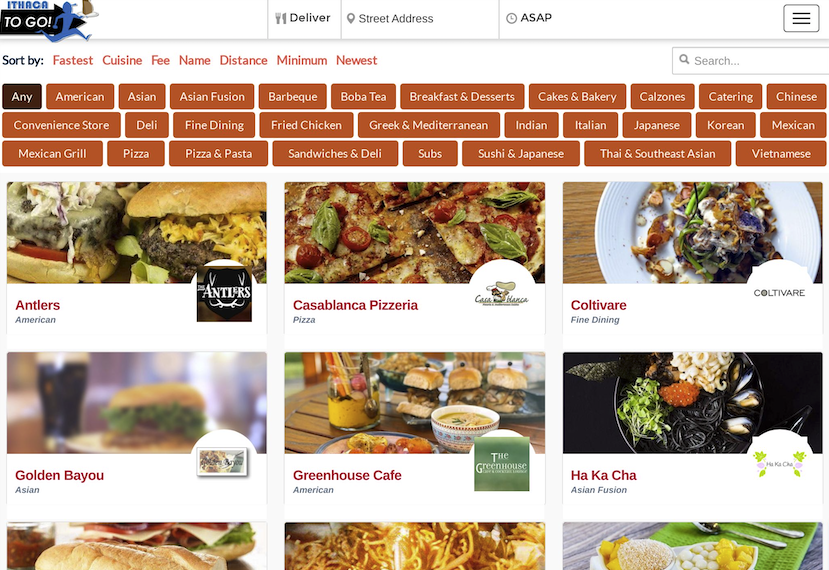

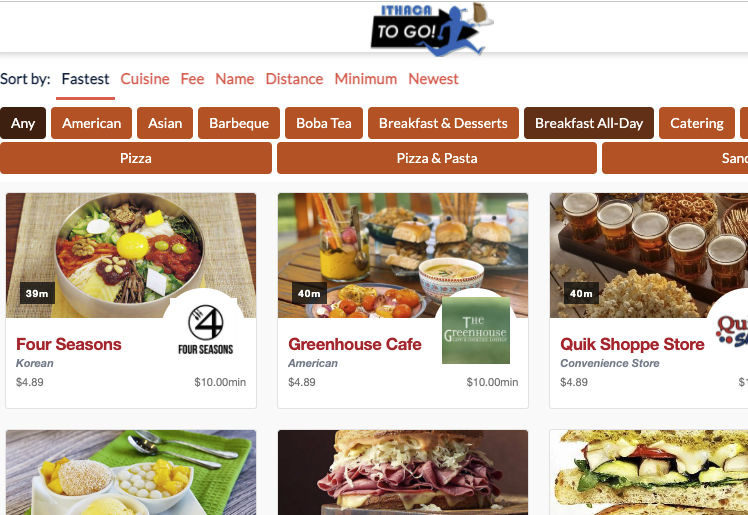

https://www.ithacatogo.com/restaurants?collapsable=1

Let's try this command on my local computer.

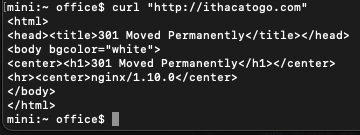

curl "http://ithacatogo.com"

https://stackabuse.com/follow-redirects-in-curl/

curl -L "http://ithacatogo.com"
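Without `-L`, curl stops at the first response it gets, so a 3xx answer never leads to the real page. Here is a minimal sketch, in Node.js, of the decision a redirect-following client makes after each response. The function name `nextRedirectUrl` and the example.com URLs are made up for illustration, and this is not how curl itself is implemented.

```javascript
// Sketch: decide where a client should go next after one HTTP response.
// Returns the absolute URL to request next, or null if there is no redirect.
function nextRedirectUrl(status, locationHeader, requestUrl) {
  const isRedirect = status >= 300 && status < 400;
  if (!isRedirect || !locationHeader) {
    return null;
  }
  // Location may be relative, so resolve it against the URL just requested.
  return new URL(locationHeader, requestUrl).href;
}

// Hypothetical responses, not captured from the real site.
console.log(nextRedirectUrl(301, "https://www.example.com/", "http://example.com/"));
console.log(nextRedirectUrl(302, "/restaurants", "https://www.example.com/"));
console.log(nextRedirectUrl(200, undefined, "https://www.example.com/restaurants"));
```

A client that follows redirects keeps calling something like this until it gets `null`, usually with a cap on the number of hops.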

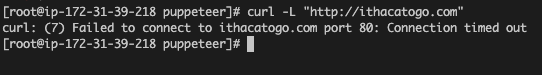

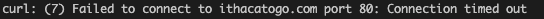

Now let's try this command on an EC2 server.

It doesn't seem to work on my server. The website below got me started on the steps that websites take to stop others from scraping their content.

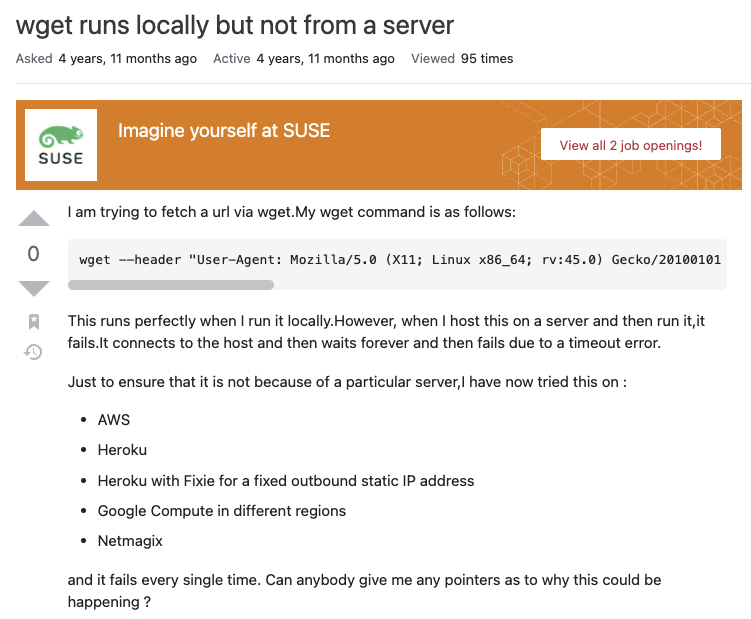

I searched Google for “runs locally but not server”.

https://stackoverflow.com/questions/41040155/wget-runs-locally-but-not-from-a-server

Same problem.

https://www.webscrapingapi.com/web-scraping-rotating-proxies/

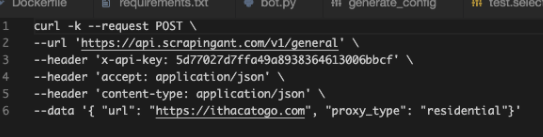

I tried this out

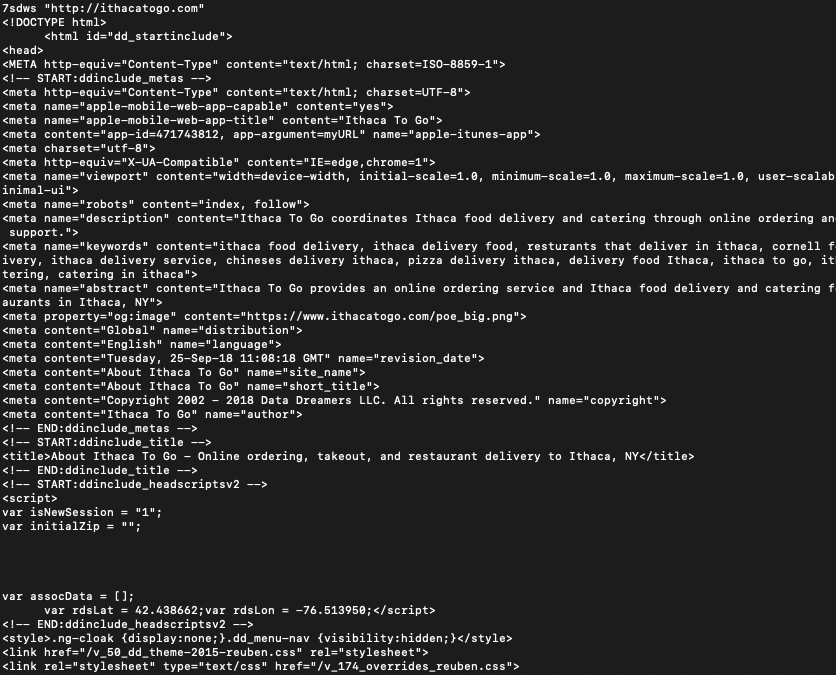

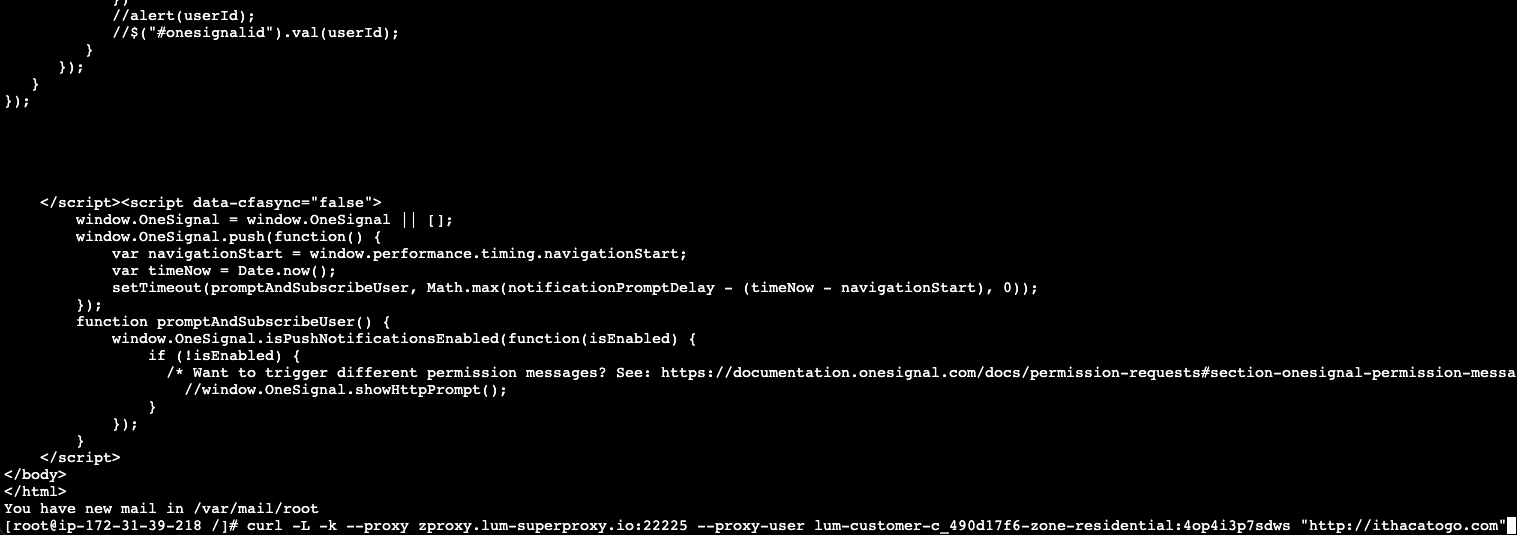

using this command

This worked! But why did it work? What was going on under the hood? Why can't I simply crawl the page from the server the same way I can locally?

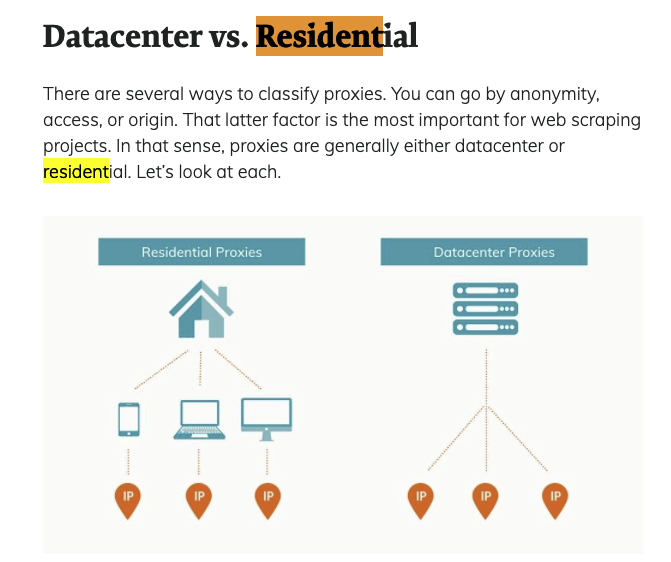

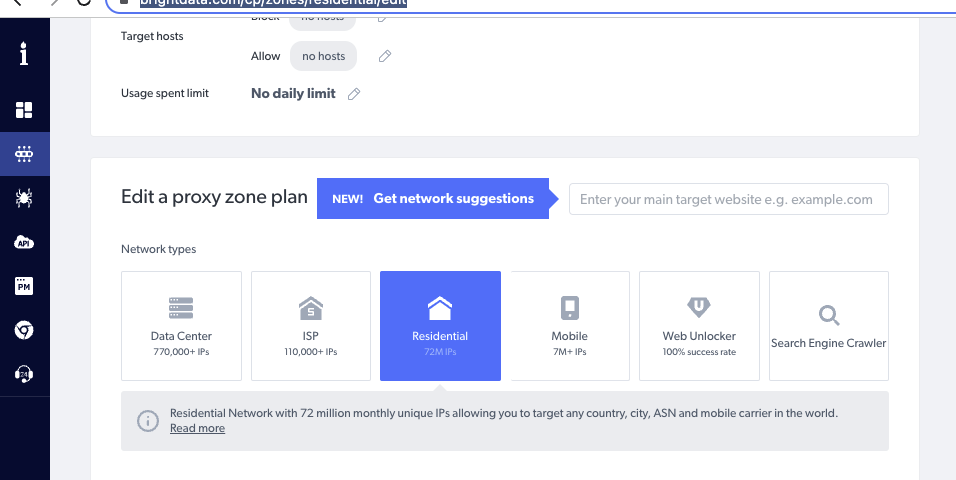

Websites are commonly scraped through proxy servers, so I looked online for good residential IP providers.
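The rotating-proxies article linked earlier describes spreading requests across many IP addresses so that no single address stands out. Here is a minimal sketch of that idea as a round-robin picker. The name `createProxyRotator` and the proxy addresses are placeholders, and a hosted provider may well handle rotation on its own side behind a single endpoint.

```javascript
// Sketch: hand out proxies from a fixed list in round-robin order.
function createProxyRotator(proxies) {
  if (!Array.isArray(proxies) || proxies.length === 0) {
    throw new Error("at least one proxy is required");
  }
  let index = 0;
  return function nextProxy() {
    const proxy = proxies[index];
    // Wrap around to the start once the end of the list is reached.
    index = (index + 1) % proxies.length;
    return proxy;
  };
}

// Placeholder addresses from the documentation-only 203.0.113.0/24 range.
const nextProxy = createProxyRotator([
  "203.0.113.10:8080",
  "203.0.113.11:8080",
  "203.0.113.12:8080",
]);

for (let i = 0; i < 4; i++) {
  console.log(`request ${i + 1} -> ${nextProxy()}`);
}
```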

https://brightdata.com/cp/zones/residential/edit

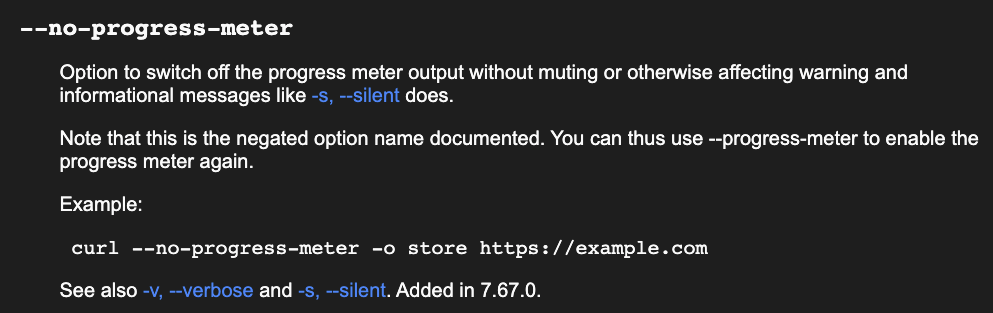

curl -s -L -k --proxy zproxy.lum-superproxy.io:22225 --proxy-user lum-customer-c_490d17f6-zone-residential:4op4i3p7sdws "http://ithacatogo.com"

The -s flag was important in getting this to work. I went days thinking that residential IPs are a sham. But in Session Manager, the above command worked even without the -s flag.

But when I used it in Theia, it didn't.

I'm not sure why, and I'm not sure what made me want to try it in Session Manager, but it works there. It doesn't work in my Theia environment unless I add the -s flag.
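To make the role of each flag explicit, here is a small sketch that assembles the same curl arguments in Node.js. The helper name `buildCurlArgs` and the `USERNAME:PASSWORD` placeholder are assumptions for illustration. It only explains what the flags mean and does not explain why Theia behaved differently.

```javascript
// Sketch: assemble the curl arguments used above, one flag at a time.
function buildCurlArgs({ url, proxy, proxyUser, silent = true }) {
  const args = [];
  if (silent) {
    args.push("-s"); // silent mode: no progress meter or error messages
  }
  args.push("-L"); // follow redirects
  args.push("-k"); // skip TLS certificate verification
  args.push("--proxy", proxy); // proxy host and port
  args.push("--proxy-user", proxyUser); // proxy credentials as user:password
  args.push(url);
  return args;
}

const args = buildCurlArgs({
  url: "http://ithacatogo.com",
  proxy: "zproxy.lum-superproxy.io:22225",
  proxyUser: "USERNAME:PASSWORD", // placeholder, not real credentials
});

// Print the command instead of running it, so the sketch has no side effects.
console.log(["curl", ...args].join(" "));
```

To actually run it, the array could be passed to `child_process.execFileSync("curl", args)`, which avoids shell quoting problems.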

Let's get this to work in Puppeteer using credentials from Bright Data.

https://brightdata.com/integration/puppeteer

const puppeteer = require("puppeteer");

const fs = require("fs");

let browser;

let page;

//----------------------------------------------------------------

async function init() {

browser = await puppeteer.launch({

args: [

// Required for Docker version of Puppeteer

"--no-sandbox",

"--disable-setuid-sandbox",

// This will write shared memory files into /tmp instead of /dev/shm,

// because Docker’s default for /dev/shm is 64MB

"--disable-dev-shm-usage",

//'--proxy zproxy.lum-superproxy.io:22225',

'--proxy-server=zproxy.lum-superproxy.io:22225',

//"--proxy-user lum-customer-c_490d17f6-zone-residential:4op4i3p7sdws"

],

});

//------------------------------------

//output was coming out truncated for some reason, had to add this to fix

process.stdout._handle.setBlocking(true);

//------------------------------------

const browserVersion = await browser.version();

//------------------------------------

page = await browser.newPage();

await page.authenticate({

username: "lum-customer-c_490d17f6-zone-residential",

password: "4op4i3p7sdws"

});

await page.setDefaultNavigationTimeout(0);

const response = await page.goto(process.argv[2]);

//await page.waitForTimeout(8000);

//await page.setViewport({width: 1024, height: 1367, deviceScaleFactor:2});

//await page.waitForTimeout(1000);

await page.setViewport({width: 1024, height: 1366, deviceScaleFactor:2});

//------------------------------------

await page.screenshot({ path: "/integration-tests/screen_shot.jpg" });

await page.close();

await browser.close();

//------------------------------------

/*fs.writeFileSync(

"/integration-tests/tmp/" + process.argv[4] + ".html",

content

);*/

}

init()

.then(process.exit)

.catch((err) => {

console.error(err.message);

process.exit(1);

});
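In the script above, the proxy host goes into Chromium's `--proxy-server` flag, while the username and password are supplied separately through `page.authenticate()`. Here is a sketch of one way to keep those values out of the source by reading them from the environment. The `BRIGHTDATA_*` variable names and the helper `proxySettingsFromEnv` are hypothetical and not part of Puppeteer or Bright Data.

```javascript
// Sketch: build the Puppeteer inputs from environment variables.
// BRIGHTDATA_HOST, BRIGHTDATA_USERNAME and BRIGHTDATA_PASSWORD are hypothetical names.
function proxySettingsFromEnv(env = process.env) {
  const { BRIGHTDATA_HOST, BRIGHTDATA_USERNAME, BRIGHTDATA_PASSWORD } = env;
  if (!BRIGHTDATA_HOST || !BRIGHTDATA_USERNAME || !BRIGHTDATA_PASSWORD) {
    throw new Error(
      "missing BRIGHTDATA_HOST, BRIGHTDATA_USERNAME or BRIGHTDATA_PASSWORD"
    );
  }
  return {
    // Intended for puppeteer.launch()
    launchOptions: {
      args: [
        "--no-sandbox",
        "--disable-setuid-sandbox",
        "--disable-dev-shm-usage",
        `--proxy-server=${BRIGHTDATA_HOST}`,
      ],
    },
    // Intended for page.authenticate()
    credentials: {
      username: BRIGHTDATA_USERNAME,
      password: BRIGHTDATA_PASSWORD,
    },
  };
}

// Demo with placeholder values instead of real credentials.
const settings = proxySettingsFromEnv({
  BRIGHTDATA_HOST: "zproxy.lum-superproxy.io:22225",
  BRIGHTDATA_USERNAME: "USERNAME",
  BRIGHTDATA_PASSWORD: "PASSWORD",
});
console.log(settings.launchOptions.args);
```

In `init()`, this would be used as `const { launchOptions, credentials } = proxySettingsFromEnv();`, followed by `puppeteer.launch(launchOptions)` and `page.authenticate(credentials)`.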